As the end of 2022 approaches, Covid has receded into the background, but it nonetheless continues to have a lingering, baleful impact on our lives. We lost members of the running community through untimely death. Others have struggled with the debilitating symptoms of Long Covid. After the widespread cancellation of events in 2020 and the postponement of major Spring marathons to the Fall in 2021, the majority of events returned to their usual place in the race calendar in 2022. The disruption of training programmes has cast some dark shadows, but in a few instances it has also focused some shafts of light on the distance running scene. This post examines a few of those shadows and some of the shafts of light. I hope that these observations will provide a foundation for a series of posts in the coming year in which I plan to return to two related themes that I have addressed in this blog over the past twelve years: optimizing longevity as a runner, and the role of mental processes in running.

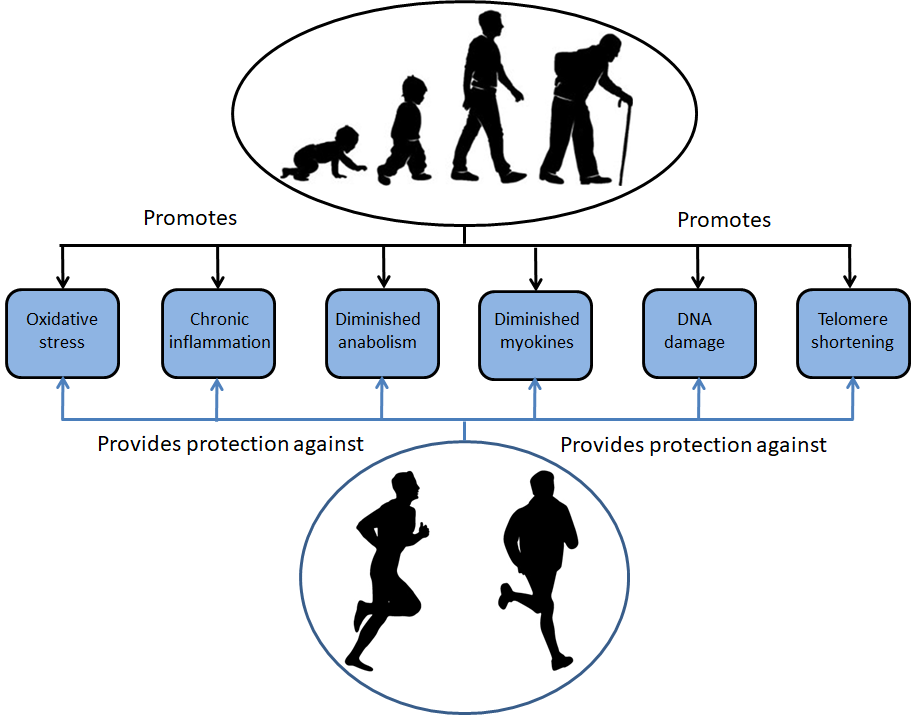

In August 2014, I produced a series of posts addressing the lessons to be gleaned from the training and racing of seven elite elderly marathoners, all of whom had set age-group world records for the marathon at age 60 or greater and had sustained elite levels of performance for at least 15 years. One of the seven was John Keston, who, in the mid-1990s, appeared likely to become the first septuagenarian to break the three-hour barrier for the marathon. In his seventy-first year, he ran 20 marathons, but never quite achieved the elusive sub-3-hour goal. At the end of that year, he wryly acknowledged that running 20 marathons in a year was probably not the best way to achieve it. The following year, he set an M70-74 world record of 3:00:58. Seven years later, in 2003, 72-year-old Ed Whitlock finally smashed the 3-hour barrier with a time of 2:59:10. A year later, at age 73, Ed set an incredible M70-74 record of 2:54:48.

Meanwhile, following several serious accidents in his early 70s, Keston continued to train at low intensity. He loved the outdoors, the sun, the wind and the rain. Typically, he ran for about two hours every third day, and walked briskly in woodland for a similar time on each of the two intervening days. His philosophy on aging was: ‘be kind to everybody, and keep moving, and be involved in life.’ (NYTimes) He eventually returned to racing, setting national age-group records at distances from 1 mile to the marathon. At age 80, he ran a half-marathon in 1:39:25, an M80-84 world record at the time, though Ed Whitlock subsequently shaved 28 seconds off that record. Keston continued to run into his mid-90s, but Covid intervened. He died in February of this year, at age 97, from complications of Covid. The first sentence of his Wikipedia page acknowledges the diversity of his talents: ‘…John Keston, was a British-born American stage actor and singer who was best known as a world record-breaking runner.’

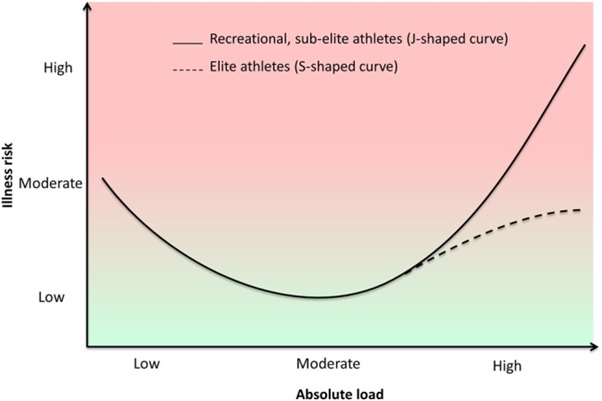

All seven of the elite elderly marathoners discussed in my blog posts in 2014 were superb runners, but their training programmes were diverse. Examination of the details indicated that those who adopted a more polarised training regimen, with a large amount of easy running and a small proportion of intense running, achieved greater longevity at elite level.

The undisputed doyen of that septet of elite elderly marathoners was Ed Whitlock. At the heart of his unique training programme were sessions of slow running with a shuffling gait, three hours per day in his 70s and up to four hours per day in his 80s, on most days of the week, on the paths of a local cemetery near his home in Mississauga, Ontario. He interspersed these long, slow shuffling sessions with frequent races, usually 5 or 10 km, in which the shuffling stride of his slow circuits of the cemetery was replaced by a graceful and powerful long stride. He continued to set Age Group World Records at distances ranging from 1500 m to the marathon into his mid-eighties. A few months before his death from prostate cancer, shortly after his 86th birthday, he set an M85-89 marathon world record of 3:56:38.

For more than fifteen years, no-one could match Ed’s performances. But now, in the four years spanning the Covid pandemic, three contenders have emerged. First was Gene Dykes. He ran three sub-three-hour marathons at age 70, culminating in an astounding time of 2:54:23 in Jacksonville, Florida, in December 2018, 25 seconds faster than Ed’s record. However, because of a technicality, the IAAF did not ratify Gene’s time as an M70-74 world record. Eleven months later, Covid emerged in Wuhan, China.

Then, almost three years later, in 2021, as Covid waned and the Spring marathons that had been rescheduled to the Fall took place, two more septuagenarians broke the 3-hour barrier. Seventy-one-year-old Jo Schoonbroodt from Maastricht, Holland, recorded 2:56:37 at the Maasmarathon in Visé, Belgium, on 19th September. Two weeks later, Michael Sheridan, from Newbury in England, recorded 2:59:37 in the rescheduled London Marathon. Three weeks after that, Schoonbroodt recorded 2:55:20 in Rotterdam. When the Rotterdam Marathon returned to its usual Spring date in April 2022, Schoonbroodt broke the three-hour barrier for a third time, recording 2:55:23. Then, a month later, in the Maasmarathon in Visé in May, Schoonbroodt finally broke Ed Whitlock’s M70-74 World Record with a time of 2:54:19.

The four septuagenarian sub-three-hour marathoners employed widely differing training programmes and brought sharply divergent mental approaches to their running. In my next few posts I will compare and contrast their approaches, with the aim of re-evaluating my earlier speculative conclusions about strategies for optimising longevity as a distance runner. In the remainder of this post I will speculate on the role that Covid might have played in the rather dramatic shift in the masters marathon landscape.

Gene Dykes

Gene joined the select group of septuagenarian sub-three-hour marathoners almost a year before Covid emerged, but I have been intrigued for two reasons by the question of what effect Covid has had on his subsequent running. The main reason is that my motivating question is what type of training provides a basis for running longevity. If Gene’s running is to help answer this question, it is crucial to discover whether or not he could sustain, or even improve on, his outstanding performance in Jacksonville in December 2018. The second, more trivial, reason is the neatness of the list of World Record holders, left in an ambiguous state by the IAAF’s failure to ratify Gene’s time of 2:54:23 as an M70-74 world record on a technicality. Gene wrote on his Facebook page on 22nd Dec 2018: ‘although the Jacksonville Marathon is certified by the USATF, the race was not sanctioned by the USATF, and both must be valid for recognition of records by USATF/IAAF…..I am still proud of what I’ve accomplished – it just looks like it’s not going to be “official”. That said, I still have four more years to do it right, and, who knows, that might happen sooner than you think!’ In his characteristically unpretentious manner, Gene made light of the non-ratification in a YouTube video: ‘Even if I were to get my Jacksonville time ratified as a World Record, it would still be Ed Whitlock 35, Gene Dykes 1’, referring to Ed’s 35 Masters World Records. Nonetheless, many of us hoped he would soon ‘do it right’ and tidy up the record book. And then Covid struck. Would the pandemic sabotage his chances of doing so before increasing age made the challenge too great?

Returning to my main question, the conclusions we might draw regarding running longevity: Gene’s training was dramatically different from Ed Whitlock’s. Both did a huge volume of running. However, whereas most of Ed’s training consisted of slow shuffling for hours at a time around the local cemetery, interspersed with regular 5 or 10 km races, Gene followed a very demanding training programme under the guidance of his coach, John Goldthorp. This programme included a substantial amount of tempo running (described in more detail in my post in November 2018). In addition, Gene competed regularly in a dizzying variety of events, including a daunting number of tough ultras (described on his website). In 2017, along with numerous shorter events, he completed three 200-mile ultras in three consecutive months. Around that time, he was running 1300 miles per year in races, within a total of 3000 miles per year including training. In 2018, he won 10 USATF age-group championships, at distances ranging from 1500 metres on the track (in 5:17) to 100 miles in the Rocky Raccoon Trail race (in 23:41:22), in addition to running three sub-three-hour marathons, culminating in the Jacksonville Marathon.

In 2019 he ran another staggering mixture of ultras, marathons and shorter distance races despite his activities being curtailed by a broken shoulder sustained in a fall during a trail run. Notable among these multiple events was an age-group victory in the Boston Marathon in 2:58:50 in April (M71 Single Age World Record, pending); two USATF national records (100 miles in 21:04:3, and the 24-hour record of 111.79 miles) in the Dawn to Dusk to Dawn ultra in May; and victory in the USATF 100 mile trail championship in December.

He planned a similarly densely stacked schedule of races for 2020. In March he ran the Los Angeles Marathon with his daughter Hilary as a training run, finishing in 3:25:39. Then his remaining events from March to December were cancelled due to Covid.

In 2021 he ran nine ultras, including the Caumsett 50 km in 3:56:43, setting an M70-74 world record. At the USATF championships he won his age group in the 5 km, the 10 km and the 2 km steeplechase. Unfortunately, in August he sustained a serious hamstring injury during the Hood to Coast relay. This severely limited his performance in the rescheduled London Marathon on 3rd October, where he limped to the finish in 5:37:56, and in Boston a week later, where he managed 3:30:02.

In April 2022 he achieved an age-group victory in the Boston Marathon in 3:12:28, but once again injury damaged his prospects later in the year. In May he tripped and fell during the Soria Moria 100 miler in Norway, seriously damaging his thigh. In October, in the London Marathon, he achieved an age-group victory in the disappointing time of 3:19:50.

In conclusion, the races cancelled due to Covid in 2020 were one limiting factor, but overall it was his dizzying mixture of races across the spectrum of distances, together with several serious injuries sustained in falls during his off-road races, that stood in the way of another 2:54:xx marathon. In a podcast recorded after his 2:54:19 marathon, Jo Schoonbroodt mentioned that in his congratulatory message, Gene said he had no plans to challenge Jo’s M70-74 record, but would instead be going for Ed Whitlock’s 3:04:54 M75-79 World Record next year.

Jo Schoonbroodt

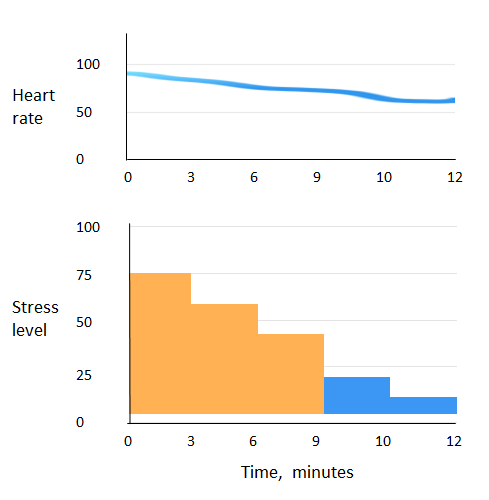

In that podcast, Jo suggested that decreased racing during the Covid interlude might have helped him on the way to his subsequent sub-3 hour marathons.

As a youngster, Jo had not been interested in sports. He took up running at age 36, running at a comfortable pace to and from work. By age 54 he had achieved a marathon personal best of 2:41:xx. He continued to run according to how he felt on the day, with no training plan, maintaining that running should be a lot of fun and happily stopping along the way to chat with friends. Through his 50s and 60s he continued to run marathons, usually in times under three hours. However, by his late 60s he was slowing slightly, and his times were typically a few minutes over the 3-hour mark.

As a result of Covid, he did not race in 2021 until the rescheduled Maasmarathon in Visé in September. He had been running 25 km per day, in up to 3 runs per day, sometimes including walking. He covered 7242 km in the year, enjoying natural trails more than roads. Although his native Holland is famously flat, he sometimes ran on the hillier terrain nearby in Belgium. In the Maasmarathon, at age 71, he recorded 2:56:37, his 72nd sub-3-hour marathon. It is likely that the large volume of low-intensity training in the preceding year made that sub-3 marathon at age 70+ possible. A month later he ran his 73rd sub-3-hour marathon in Rotterdam, and then 6 months later, again in Rotterdam, he once more finished in under 3 hours. Finally, in the Maasmarathon, by now back at its usual Spring date, Jo took 29 seconds off Ed Whitlock’s M70-74 world record. While the long, slow running in the preceding year was probably a major factor, it is also noteworthy that he ran in Asics Metaspeed Sky shoes. It is possible that their embedded carbon plates also contributed to his faster race times.

Michael Sheridan

Mike Sheridan achieved his first V70 sub-three-hour marathon in London in October 2021. As in Jo’s case, the Covid interlude probably played a part in shaping his performance, though its influence was less clear-cut. His interview on the Marathon Talk podcast provides the background.

In his school days Mike had competed at county level in cross-country events. He then did no running for 45 years, though he kept himself generally fit. He restarted running at age 60, in 2010. That year he ran the Dexia Route du Vin Half-Marathon in Luxembourg in 1:40:59. Three years later he completed the Boston Marathon in 3:21:09. He then embarked upon a training programme based on a systematic progression from his best 5K time, with targets for progressively longer distances set according to his 5K pace. He also took part in strictly supervised, strenuous weightlifting sessions twice per week, which he considers created the bedrock of his current marathon performance. In a typical session he lifted a total of 5000 lbs in 15 minutes. These gruelling sessions built not only physical but also mental strength. The following year, 2014, his finishing time in Boston was 3:12:54, and that September in Berlin he clocked 2:59:14. He achieved his lifetime best of 2:54:24 in London in 2016, at age 66.

Unfortunately, he suffered a serious pelvic injury in a bicycle accident in 2017 that set him back 18 months. Once recovered, he prepared for the 2019 London Marathon, at age 69, under coach Sarah Crouch. In demanding sessions such as 10x800m he developed the mental strength to drive hard to the finish. In that marathon he recorded 2:57:04 with a 90-second negative split, running the final 5k in 20:11. Later that year, in Chicago, he started too fast and hit the wall badly at mile 23, finishing in 3:04:55. Then Covid intervened.

He planned to run the 2021 London Marathon, which had been rescheduled to October, shortly after his 72nd birthday. In the first half of 2021 he did a low-key build-up, with less focussed training due to uncertainty about a possible cancellation and limited opportunities for racing. He began training in earnest in July, running 45-52 miles per week. His long runs included a lot of intense pace work, in the form of either surges lasting progressively longer, from 2 to 6 minutes, or progression runs. His highest mileage was 61 miles per week, in August. His final long run, at the end of August, was a 20 miler, starting with 10 easy miles and then increasing the pace progressively to the end.

On race day, he started with no particular expectation about his likely finishing time. He reached the half-way mark in 90 minutes. Spurred on by encouragement from his sister at the roadside, he set his sights on a negative split despite the anticipated hard slog into the headwind. At mile 23 he realised that a sub-three-hour time might be possible. The mental strength developed during the gruelling weightlifting sessions and honed by coach Sarah Crouch in 2019 stood him in good stead. He pushed on to finish in 2:59:37. Although the low-key build-up due to Covid had created uncertainty and might have been a handicap, it is also plausible that the relaxed training for much of the two years since Chicago had in fact prepared him to profit maximally from the intense training in July and August.

In response to questioning from Martin Yelling on the Marathon Talk podcast, he suggested that, since he was still at a relatively early phase of his running career, and allowing for the 18 months lost after his bicycle accident in 2017, it remained reasonable to aim for 2:54:xx before age 75. After that, he would set his sights on Ed Whitlock’s M75-79 world record of 3:04:54.

The future

In the New Year I plan to compare and contrast the diverse training and mental approaches of these four septuagenarian sub-three-hour marathoners, Ed Whitlock, Gene Dykes, Jo Schoonbroodt and Michael Sheridan, in the light of current understanding of the processes of human aging.